This blog post walks through the VPC, VPC Peering and the Transit VPC.

After Aarthi and I covered Transit VPC at a high level in this AWS blog post, I thought I would expand on the architecture behind Transit VPC.

VPC Recap

Let’s start with a very quick recap of a VPC (Virtual Private Cloud). Essentially, it’s your virtual data center on AWS.

Customers typically create one or more VPCs and, for each VPC, define a CIDR block and one or more IP subnets from which their AWS resources (such as EC2 instances) pick up an IP address.
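To make the CIDR/subnet relationship concrete, here is a minimal sketch using Python's standard `ipaddress` module. The 10.0.0.0/16 block and the /24 subnet size are assumptions picked for illustration; they are not values from any particular deployment.

```python
import ipaddress

# Hypothetical VPC CIDR, chosen for illustration only.
vpc_cidr = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC CIDR into /24 subnets and keep the first three,
# for example one per Availability Zone.
subnets = list(vpc_cidr.subnets(new_prefix=24))[:3]

for subnet in subnets:
    # Every subnet has to sit inside the VPC CIDR.
    assert subnet.subnet_of(vpc_cidr)
    print(subnet, "-", subnet.num_addresses, "addresses")
```

Each subnet is simply a smaller slice of the VPC's address space, and an instance launched in a subnet gets its private IP from that slice.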

Access to services running in a VPC can be secured using AWS Security Groups or Network Access Control Lists (NACLs).

A VPC is tied to a region (e.g. Oregon, Frankfurt, Sydney, etc…) but it can spread across multiple Availability Zones within a region.

AWS Users often use many VPCs in their design for segmentation, administration, billing and compliance purposes.

For example, some customers might create:

- VPC1 = Prod, VPC2 = Dev, VPC3 = Test

- VPC1 = HR, VPC2 = R&D, VPC3 = Sales

- VPC1 = ‘Business Unit 1’, VPC2 = ‘Business Unit 2’

- VPC1 = APP1, VPC2 = APP2, VPC3 = APP3

It is not uncommon for me to come across customers with tens, if not hundreds, of VPCs. It can get messy to monitor them and especially to interconnect them.

And this is what the Transit VPC and the Transit Gateway are for. But first let’s talk about VPC Peering. If you’re very familiar with VPC Peering, you can scroll down to the next section “What is AWS Transit VPC“.

VPC Peering

The “traditional” way of establishing connectivity between multiple VPCs is with VPC Peering.

Feel free to read the detailed guide here or read my summary below:

A VPC peering connection is a networking connection between two VPCs that enables you to route traffic between them using private IPv4 addresses.

Instances in either VPC can communicate with each other as if they are within the same network. You can create a VPC peering connection between your own VPCs, or with a VPC in another AWS account.

A VPC peering connection is a one to one relationship between two VPCs.

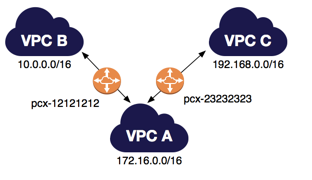

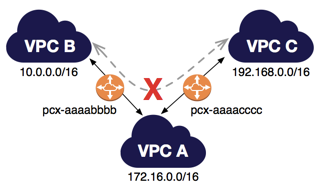

On the picture above, there are two VPC peering connections.

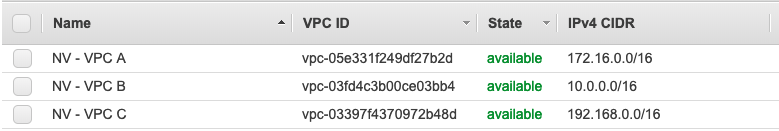

VPC A is peered with both VPC B and VPC C. In my lab, I created VPC A (CIDR 172.16.0.0/16), VPC B (CIDR 10.0.0.0/16) and VPC C (CIDR 192.168.0.0/16) like in the diagram above.

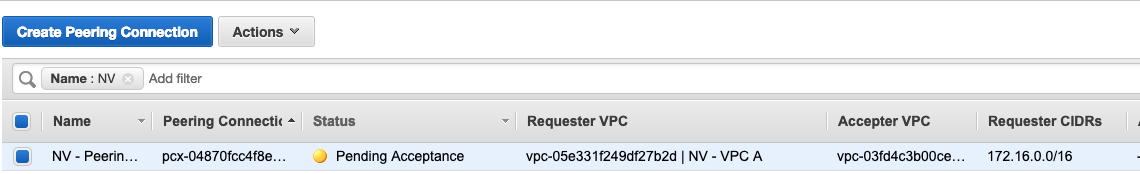

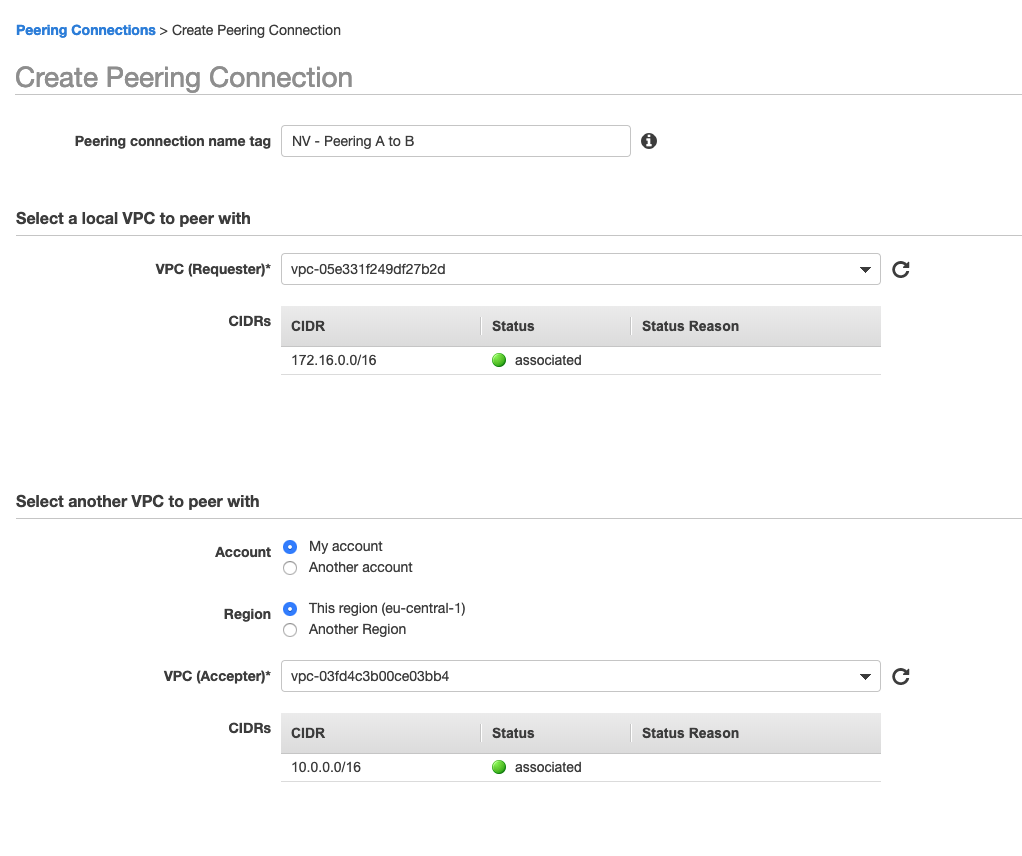

In the VPC Portal on the AWS Console, I then created a VPC Peering Connection. It’s super simple. Just pick the two VPCs you want to peer together (“Requester” and “Accepter”). You’ll notice you could peer VPCs in different accounts and in different regions, but mine are both in the same account and in the Frankfurt region.

You just have to accept it (AWS requires this in case somebody in a different account wants to peer with you and you don’t fancy peering with that account) and then the peering comes up.

I built two peerings – one from VPC A to VPC B and one from VPC A to VPC C.

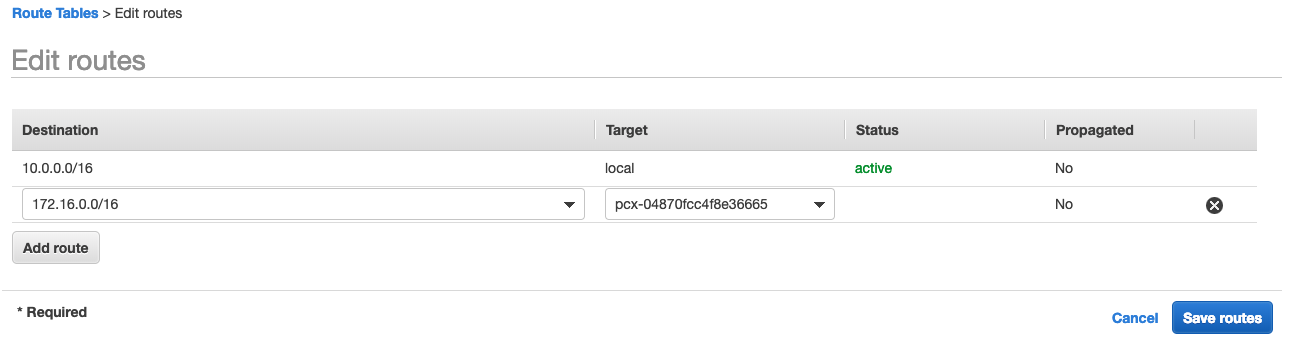

You’re not quite done yet – you need to edit the VPC routing table to tell VPC A how to reach the VPC B CIDR and vice-versa. I also had to do it for VPC A and VPC C.

The “target” in the routing table below would be the Peering Connection you’ve just created (always starts with pcx-). Essentially, you’re just telling the VPC router that, if it sees packets with a destination IP within 10.0.0.0/16, it should send them over the peering connection to VPC B.
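The lookup the VPC router performs can be sketched in a few lines of Python. This is a simplified model, not AWS code: the CIDRs are the ones from my lab, but the `pcx-` identifiers and the `lookup` helper are made up for illustration.

```python
import ipaddress

# Simplified model of VPC A's route table from the lab.
# The pcx- identifiers are hypothetical.
ROUTES_VPC_A = {
    "172.16.0.0/16": "local",
    "10.0.0.0/16": "pcx-aaaa1111",     # peering to VPC B
    "192.168.0.0/16": "pcx-bbbb2222",  # peering to VPC C
}

def lookup(routes, destination):
    """Return the target of the most specific matching route, or None."""
    ip = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes.items()
        if ip in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no route: the packet is dropped
    # The longest prefix (most specific route) wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]

print(lookup(ROUTES_VPC_A, "10.0.0.44"))       # EC2-VPCB, via the VPC B peering
print(lookup(ROUTES_VPC_A, "192.168.11.190"))  # EC2-VPCC, via the VPC C peering
print(lookup(ROUTES_VPC_A, "8.8.8.8"))         # no matching route in this table
```

The same kind of entry is needed in the other direction: VPC B and VPC C each need a route for 172.16.0.0/16 pointing at their side of the peering, otherwise the replies have nowhere to go.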

I’ve got an EC2 instance in each VPC and EC2-VPCA (172.16.10.16) can now talk to EC2-VPCB (10.0.0.44) and EC2-VPCA can talk to EC2-VPCC (192.168.11.190).

[ec2-user@ip-172-16-10-16 ~]$ ping 192.168.11.190

PING 192.168.11.190 (192.168.11.190) 56(84) bytes of data.

64 bytes from 192.168.11.190: icmp_seq=1 ttl=255 time=0.948 ms

64 bytes from 192.168.11.190: icmp_seq=2 ttl=255 time=3.94 ms

64 bytes from 192.168.11.190: icmp_seq=3 ttl=255 time=0.956 ms

64 bytes from 192.168.11.190: icmp_seq=4 ttl=255 time=1.01 ms

64 bytes from 192.168.11.190: icmp_seq=5 ttl=255 time=1.09 ms

^C

--- 192.168.11.190 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4004ms

rtt min/avg/max/mdev = 0.948/1.592/3.948/1.179 ms

[ec2-user@ip-172-16-10-16 ~]$ ping 10.0.0.44

PING 10.0.0.44 (10.0.0.44) 56(84) bytes of data.

64 bytes from 10.0.0.44: icmp_seq=1 ttl=255 time=0.858 ms

64 bytes from 10.0.0.44: icmp_seq=2 ttl=255 time=0.898 ms

64 bytes from 10.0.0.44: icmp_seq=3 ttl=255 time=0.886 ms

64 bytes from 10.0.0.44: icmp_seq=4 ttl=255 time=0.925 ms

64 bytes from 10.0.0.44: icmp_seq=5 ttl=255 time=0.879 ms

^C

--- 10.0.0.44 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4034ms

rtt min/avg/max/mdev = 0.858/0.889/0.925/0.029 ms

EC2-VPCB and EC2-VPCC though… No luck.

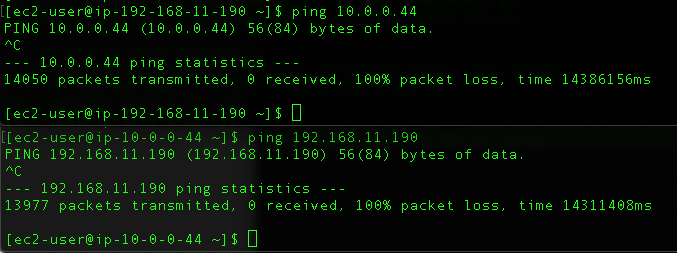

You might have noticed that VPC B and VPC C are not peered, and you cannot use VPC A as a transit point for peering between VPC B and VPC C. Transitive peering relationships are not supported.

What ‘transitive‘ in this sense means is that you cannot go from VPC B to VPC C through VPC A.

If you want to enable routing of traffic between VPC B and VPC C, you must create a unique VPC peering connection between them.
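A toy model makes the non-transitive behaviour easy to see. The sketch below treats each peering as an unordered pair of VPCs and only allows traffic over a direct peering; the `can_talk` helper and the single-letter VPC names are illustrative assumptions.

```python
# Peering connections modelled as unordered pairs of VPC names.
peerings = {frozenset({"A", "B"}), frozenset({"A", "C"})}

def can_talk(src, dst, peerings):
    """Traffic flows only over a direct peering; nothing is forwarded via a third VPC."""
    return frozenset({src, dst}) in peerings

print(can_talk("A", "B", peerings))  # True: direct peering exists
print(can_talk("B", "C", peerings))  # False: A is not a transit point

# Add the missing peering between B and C.
peerings.add(frozenset({"B", "C"}))
print(can_talk("B", "C", peerings))  # True
```

Note that the check never walks a path through another VPC. That is the whole point: reachability is per peering, not per graph.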

I did just that, then edited the routing table in both VPCs. You can see in the little video how quickly changes take effect on the router. It’s almost immediate: as soon as I complete the routing changes on the VPC C routing table, pings between VPC B and VPC C start flowing.

Why not use VPC Peering then?

Well, as you saw above, to get all VPCs to talk to each other, you need a full mesh of VPC Peerings. For the 3 VPCs above, that was easy enough.

But what happens when I have 5 VPCs? Or 10 VPCs? Or 30 VPCs?

If I remember my maths lessons correctly:

To mesh n VPCs together, you would need n*(n-1)/2 peering connections.

Maintaining and configuring that many VPC Peering Connections at scale creates huge complexity. For example:

- A full mesh of 10 VPC peers means 45 peering connections.

- A full mesh of 30 VPC peers means 435 peering connections.
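If you want to check the arithmetic for your own environment, the formula fits in a one-line function. The function name is mine, and the sketch assumes every VPC has to reach every other VPC.

```python
def full_mesh_peerings(n):
    """Number of peering connections needed to fully mesh n VPCs."""
    if n < 0:
        raise ValueError("the number of VPCs cannot be negative")
    # Each of the n VPCs peers with the other n - 1; halve it because
    # one peering connection serves both ends.
    return n * (n - 1) // 2

for n in (3, 5, 10, 30):
    print(f"{n} VPCs -> {full_mesh_peerings(n)} peering connections")
```

The count grows roughly with the square of the number of VPCs, which is why a design that felt fine at 3 VPCs becomes painful at 30.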

That’s pretty horrible.

Not only that, but VPC Peering only works between AWS VPCs. “VPC Peering” is not based on a standard protocol like BGP or IPsec.

It’s something an AWS networking wizard/witch came up with in their dungeon (no sane person could have come up with AWS networking – it breaks many of the networking rules I’ve learned throughout my career).

You cannot use VPC peering to communicate with on-premises DCs or with other clouds.

All the above are reasons why AWS introduced the concept of “Transit VPC” and subsequently “Transit Gateway” to support communications between multiple VPCs.

What is AWS Transit VPC ?

You won’t find “Transit VPC” in the list of AWS Services or in the console anywhere. It’s not actually a product, it’s more of a reference architecture.

It was first announced in August 2016 and in some ways, it has already been superseded by the AWS Transit Gateway (that’s a blog post for another day). But the Transit VPC is widely deployed and still has a lot of great use cases.

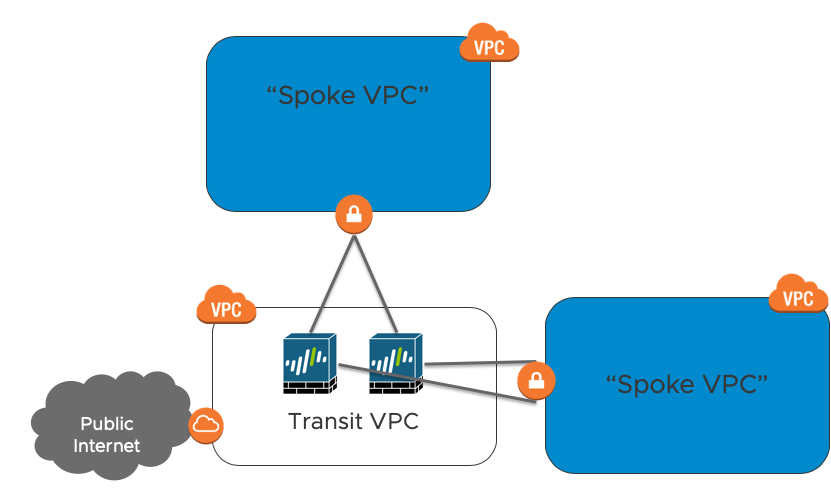

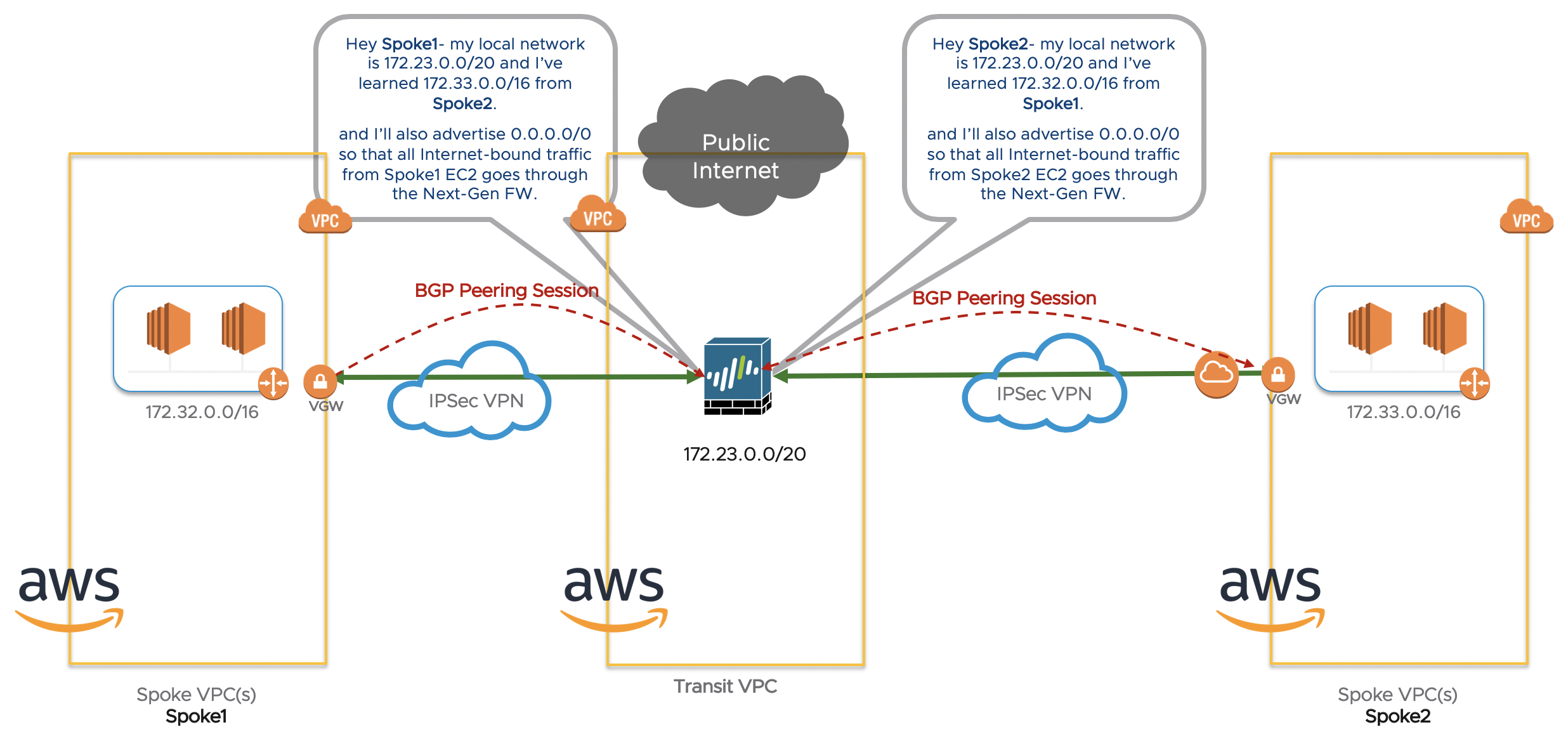

The Transit VPC is based on a hub and spoke architecture.

The Transit VPC is central, surrounded by additional “spoke” VPCs, corporate DCs and other networks.

It leverages traditional IPsec VPN tunnels to interconnect sites together (instead of VPC peering) and we can leverage a dynamic routing protocol (typically BGP) as well.

One of the main benefits is that we can leverage an IDS/IPS/NGFW for additional security.

The Transit VPC also brings the following benefits:

- Higher scale

- Familiarity and compatibility (as we can use our existing firewall vendors)

- Firewall insertion (VPC Peering and the Transit Gateway, or TGW, are routers and don’t inspect any of the traffic)

- Encryption everywhere (unlike VPC peering traffic which is sent unencrypted)

AWS Transit VPC Architecture

Throughout my posts, I will use the Palo Alto Next-Gen FW but the same principle should apply to other virtual firewalls such as Cisco CSR or ASAv, Check Point, Juniper, FortiGate, etc…

Within the Transit VPC is an EC2 instance running a Palo Alto Firewall. You can (and probably should) run multiple PAN EC2 instances.

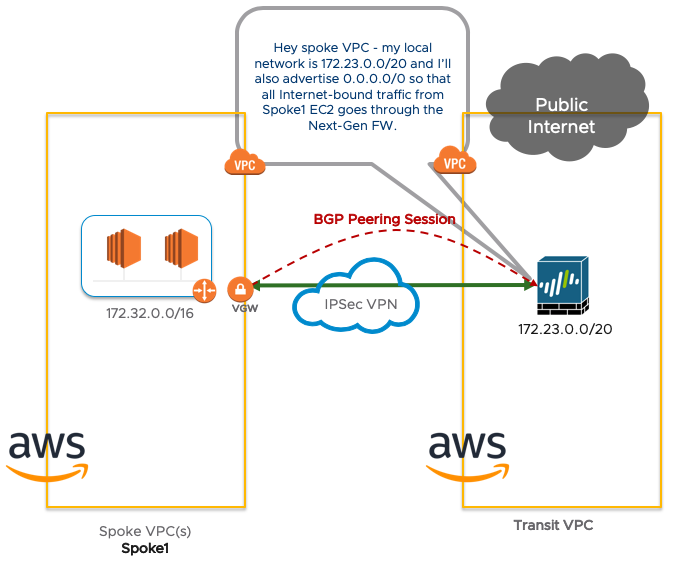

The blue boxes are Spoke VPCs. You can have many of them. There will be one or more VPNs between the Spoke VPC VGW (Virtual Private Gateway) and the Palo Alto FW.

Attached to the Transit VPC is an Internet Gateway (IGW). All spoke VPCs will communicate to the Internet via this IGW. The Palo Alto FW has a default route to the Internet Gateway. The Palo Alto advertises this route (through BGP route redistribution) to the spokes.

In the picture below, we have 1 spoke connected to our transit VPC. The Spoke VPC will advertise its local CIDR to the Transit VPC.

The Transit VPC will advertise the default route, its local CIDR and all other networks learned from other Spokes.
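The route exchange can be modelled without any BGP machinery. The sketch below is only a bookkeeping exercise under assumed addressing: the transit CIDR, the spoke names and CIDRs, and the `routes_advertised_to` helper are all hypothetical.

```python
DEFAULT_ROUTE = "0.0.0.0/0"

def routes_advertised_to(spoke, transit_cidr, spoke_cidrs):
    """Routes the transit hub advertises to one spoke: the default route,
    the transit VPC's own CIDR, and every CIDR learned from the other spokes."""
    learned_from_others = [
        cidr for name, cidr in spoke_cidrs.items() if name != spoke
    ]
    return [DEFAULT_ROUTE, transit_cidr] + learned_from_others

# Hypothetical addressing, for illustration only.
transit_cidr = "10.255.0.0/16"
# Each spoke advertises its local CIDR to the transit VPC.
spoke_cidrs = {"spoke1": "10.1.0.0/16", "spoke2": "10.2.0.0/16"}

for spoke in spoke_cidrs:
    print(spoke, "receives", routes_advertised_to(spoke, transit_cidr, spoke_cidrs))
```

With a single spoke connected, the list collapses to the default route plus the transit CIDR. Each extra spoke adds one more entry for everyone else, with no change needed on the existing spokes.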

Spoke1 only has to set up 1 VPN from the AWS Virtual Private Gateway to the Palo Alto. There is no need to run a Palo Alto FW inside any of the Spoke VPCs.

When you connect another spoke (be it another VPC like in the picture below, a remote site or a VMware Cloud on AWS SDDC), it’s a fairly straightforward architecture: spoke-to-spoke traffic goes through the transit VPC.

When you compare it to the many VPC peerings we had to set up to establish connectivity between many VPCs, you can see how this architecture greatly simplifies things: every time a new VPC comes online, connect it up to the Transit VPC via a VPN tunnel and you’re sorted. No need to constantly configure and maintain a full VPC Peering mesh.
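To put rough numbers on that comparison, here is a small sketch. It assumes a single VPN connection per spoke (you may well run more for redundancy) and the function names are mine.

```python
def mesh_links(n):
    """Peering connections needed for a full mesh of n VPCs."""
    return n * (n - 1) // 2

def hub_and_spoke_links(n, vpns_per_spoke=1):
    """VPN connections needed to attach n spoke VPCs to one transit VPC."""
    return n * vpns_per_spoke

for n in (3, 10, 30):
    print(f"{n} VPCs: full mesh = {mesh_links(n)}, hub and spoke = {hub_and_spoke_links(n)}")
```

Adding the 31st VPC to the mesh means 30 new peerings and 60 route table changes to think about; adding it to the transit VPC means one more VPN.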

The next post will look at the actual configuration and routing tables of a transit VPC fully configured and what it looks like when connected to VMware Cloud on AWS.

Thanks for reading.

Looking forward to the next post!


Excellent post. Keep posting this kind of info on your page. I’m really impressed by your site.


Thanks for the post!

One question: is it possible to establish a connection from my branch (Meraki MX64) to a vMX, then to the Palo Alto Firewall (Transit VPC), and on to Oracle Cloud Infrastructure (OCI)?

Description – (1) I want to advertise branch traffic towards the vMX through Auto VPN (the destination should be the vMX).

(2) The vMX already has connectivity to the Palo Alto Firewall through a direct connection (BGP peering), which has been mapped in the Transit VPC.

(3) From the Palo Alto, I want to build a connection towards Oracle Cloud as a spoke.

Can the above requirement be fulfilled using this concept?
