Cluster Mesh is a pretty cool feature and not something I had played much with until I joined Isovalent. It enables users to connect clusters together with a single command, regardless of the underlying Kubernetes platform they’re running on.

It can be used for multi-cloud connectivity, for disaster recovery (DR), or for load balancing.

What we had, up until version 1.12, was the ability to balance traffic across Kubernetes Services spread across multiple clusters.

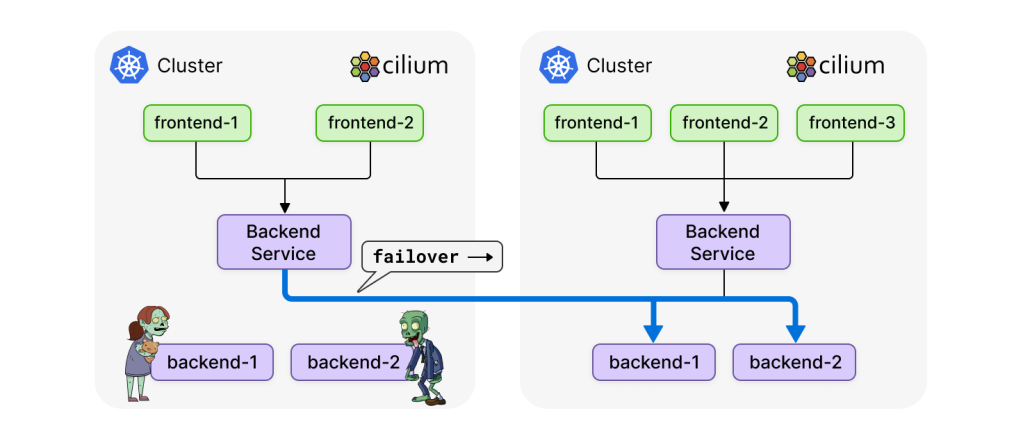

By Service, I mean the Virtual IP that faces Pods (backend-1 and backend-2 in the pictures below). I’ll always think of Service as a Load Balancer VIP and backends as “real server” or “server pool” – the terminology I learned in my networking days.

Anyway, by default, the traffic will be shared equally across the clusters, assuming of course that they are healthy (i.e. they respond correctly to liveness checks).

What is new with Cilium 1.12 is the ability to set some affinity.

What this means is that you might not want the traffic to go to the other cluster when the local one is working fine. By setting the affinity of the global service to “local”, the traffic will prefer the local cluster (avoiding cross-cluster traffic and its additional latency).
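As a rough sketch of what that setting looks like, the snippet below builds a Service manifest as a Python dictionary and prints it as JSON (which `kubectl apply -f` accepts). The annotation keys are the ones I believe Cilium 1.12 uses (`io.cilium/global-service` and `io.cilium/service-affinity`); later releases document `service.cilium.io/global` and `service.cilium.io/affinity`, so treat the keys as an assumption and check the docs for your version. The Service name, selector and port are made up for illustration.

```python
import json


def global_service(name, affinity="local"):
    """Build a Service manifest annotated as a Cilium global service.

    The annotation keys are assumed to match Cilium 1.12; the selector
    and port are hypothetical placeholders.
    """
    if affinity not in ("local", "remote", "none"):
        raise ValueError(f"unsupported affinity: {affinity}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # Share this Service across the clusters in the mesh.
                "io.cilium/global-service": "true",
                # Prefer backends in the local cluster while they are healthy.
                "io.cilium/service-affinity": affinity,
            },
        },
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": name},
            "ports": [{"port": 80}],
        },
    }


manifest = global_service("backend")
print(json.dumps(manifest, indent=2))
```

The same Service (same name and namespace) would need to exist in every cluster that takes part in the load balancing.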

What if the local backends were to fail? Well, the traffic would fail over to the remote cluster. It gives you an easy way to achieve distributed/global load balancing across multiple clusters.

If you want to stick to the existing model, where traffic is shared across all clusters, set the affinity to “none”. If you want to prefer the remote clusters over the local one (it’s unlikely, but you might want to do that while you run some maintenance on your local service), you can set the affinity to “remote”.
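To make the three settings concrete, here is a toy model of the behaviour described above. It is only my mental picture of the feature, not how Cilium actually implements backend selection in its datapath; the `Backend` class, the cluster names and the `candidates` helper are all invented for this example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    cluster: str
    healthy: bool = True


def candidates(backends, local_cluster, affinity="none"):
    """Return the healthy backends that are eligible under the given affinity."""
    healthy = [b for b in backends if b.healthy]
    local = [b for b in healthy if b.cluster == local_cluster]
    remote = [b for b in healthy if b.cluster != local_cluster]
    if affinity == "local":
        # Prefer local backends; fail over to remote ones if none are healthy.
        return local or remote
    if affinity == "remote":
        # Prefer remote backends; fall back to local ones if none are healthy.
        return remote or local
    # "none": every healthy backend in every cluster is eligible.
    return healthy


def pick(backends, local_cluster, affinity="none"):
    """Pick one eligible backend at random, or None if nothing is healthy."""
    pool = candidates(backends, local_cluster, affinity)
    return random.choice(pool) if pool else None


backends = [Backend("backend-1", "cluster-1"), Backend("backend-2", "cluster-2")]
print(pick(backends, "cluster-1", affinity="local").name)

# Simulate the local backend failing: traffic fails over to the remote cluster.
degraded = [Backend("backend-1", "cluster-1", healthy=False), backends[1]]
print(pick(degraded, "cluster-1", affinity="local").name)
```

Seen from `cluster-1`, the first call can only return `backend-1`, and the second can only return `backend-2` because the local backend is marked unhealthy.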

I’ll let you watch the video – let me know if you have any feedback!