This post walks through how to automatically add hosts to, and remove hosts from, your VMware cluster on a schedule, by leveraging a VMware Cloud on AWS SDDC, Python and AWS Lambda.

While we have the very cool Elastic DRS feature that can scale up and down based on resource thresholds, EDRS is not clever enough (yet) to anticipate when things will grow. I have customers that have a clear business pattern and they want their infrastructure capacity aligned to the same schedule.

I remember an IT Manager at a UK bank telling me they were running application development and testing in the Cloud and wanted to reduce the size of their infrastructure at the end of the week, when developers would log off. The applications they ran were stateless, so no data would be lost when we shrank the cluster. At the same time, they wanted the infrastructure to be back at full scale by Monday morning.

I also recently came across a use case of a teaching institution in Asia that is looking at VMware Cloud on AWS as a platform to host their teaching and learning labs for their students.

However, like most schools, they have budget constraints, so we looked at ways to make the overall solution more cost-effective. One way is to use on-demand hosts for the peak periods we have identified and to schedule those hosts to be added before the class starts. Once the lesson is over, we remove the hosts from the cluster.

While this is not natively built into VMware Cloud on AWS, it is pretty straightforward to code and set up, using AWS CloudWatch Events, AWS Lambda and Python (though I should also give some kudos to Gilles for the support). I assume that it’s also possible with the Function-as-a-Service platform VEBA, although I haven’t tested it yet.

Standard disclaimer: I’ve tested it and it worked for me but it’s not officially supported by VMware so use it at your own risk.

As you will see, even users without any knowledge of Python can do it.

It’s fairly simple: we will run a cron job with AWS CloudWatch Events to trigger an AWS Lambda function at the time of our choice (e.g. Monday morning or Friday evening). The Lambda function is a Python script that makes an API call to grow or shrink our VMware Cloud on AWS SDDC.

The requirements are simple: you will need a VMware Cloud on AWS account, an SDDC deployed and an AWS account. That’s it!

Let’s walk it through:

Tutorial

The walk-through below schedules an increase in the number of hosts in your VMware infrastructure (scaling down is the exact same process, with slightly different code).

First, create a new folder on your desktop. Then go ahead and download the code from GitHub here. There are two files (lambda_function.py and config.ini). Move the files to the new folder.

Let’s first review the Python Code. There is only one thing you need to change in this file: the number of hosts you want to add or remove.

Look at the 3 on line 53 of that file, in the call to addCDChosts inside lambda_handler: in this instance, I’ll be scheduling the addition of 3 hosts to my SDDC.

The script essentially makes an API call to VMware Cloud on AWS to add the requested number of hosts.

    """
    Scheduled Auto-Scale for VMware Cloud on AWS

    You can install python 3.6 from https://www.python.org/downloads/windows/

    You can install the dependent python packages locally (handy for Lambda) with:
    pip install requests -t . --upgrade
    pip install configparser -t . --upgrade
    """

    import requests      # need this for Get/Post/Delete
    import configparser  # parsing config file

    # Read the VMC endpoints and credentials from config.ini
    config = configparser.ConfigParser()
    config.read("./config.ini")
    strProdURL = config.get("vmcConfig", "strProdURL")
    strCSPProdURL = config.get("vmcConfig", "strCSPProdURL")
    Refresh_Token = config.get("vmcConfig", "refresh_Token")
    ORG_ID = config.get("vmcConfig", "org_id")
    SDDC_ID = config.get("vmcConfig", "sddc_id")

    print("The SDDC " + str(SDDC_ID) + " in the " + str(ORG_ID) + " ORG will be scaled up.")


    def getAccessToken(myKey):
        """Exchange the refresh token for a short-lived access token."""
        params = {'refresh_token': myKey}
        headers = {'Content-Type': 'application/json'}
        response = requests.post(strCSPProdURL + '/csp/gateway/am/api/auth/api-tokens/authorize', params=params, headers=headers)
        response.raise_for_status()  # fail early on a bad or expired token
        jsonResponse = response.json()
        access_token = jsonResponse['access_token']
        return access_token


    def addCDChosts(hosts, org_id, sddc_id, sessiontoken):
        """Request `hosts` additional hosts for the SDDC."""
        myHeader = {'csp-auth-token': sessiontoken}
        myURL = strProdURL + "/vmc/api/orgs/" + org_id + "/sddcs/" + sddc_id + "/esxs"
        strRequest = {"num_hosts": hosts}
        response = requests.post(myURL, json=strRequest, headers=myHeader)
        if response.ok:
            print(str(hosts) + " host(s) have been added to the SDDC")
        else:
            print("The request to add " + str(hosts) + " host(s) failed")
        print(response)
        return


    # --------------------------------------------
    # ---------------- Main ----------------------
    # --------------------------------------------
    def lambda_handler(event, context):
        session_token = getAccessToken(Refresh_Token)
        addCDChosts(3, ORG_ID, SDDC_ID, session_token)  # 3 = number of hosts to add
        return


    # Lets you test locally with "python lambda_function.py"; Lambda calls lambda_handler itself
    if __name__ == "__main__":
        lambda_handler(None, None)

When the code is executed, the following logs will appear:

nvibert-a01:Schedule SDDC - Scale Up nicolasvibert$ python lambda_function.py

The SDDC fa817adc-6ef0-4dc6-8ff0-XXXX in the 84e84f83-bb0e-4e12-9fe0-XXXXXXXX ORG will be scaled up.

3 host(s) have been added to the SDDC

<Response [202]>

The other file you need to edit is config.ini.

[vmcConfig]

strProdURL = https://vmc.vmware.com

strCSPProdURL = https://console.cloud.vmware.com

refresh_Token = XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

org_id = XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

sddc_id = XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

The Org and SDDC ID can be found in the Support Tab of your VMware Cloud on AWS SDDC.

For the Refresh token, you need to go to the VMC console to generate it. Follow the instructions here to do so. Then update the config.ini file with the details above.
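Before packaging, it can be worth checking that no placeholder is left in config.ini. The sketch below is one way to do that. `find_unset_keys` is a hypothetical helper that is not part of the original script, and it assumes that a value made up only of X characters is still a placeholder.

```python
import configparser

# Keys the Lambda script reads from the [vmcConfig] section.
REQUIRED_KEYS = ("strProdURL", "strCSPProdURL", "refresh_Token", "org_id", "sddc_id")


def find_unset_keys(config_text, section="vmcConfig"):
    """Return the required keys that are missing, empty or still a run of X's."""
    parser = configparser.ConfigParser()
    parser.read_string(config_text)
    unset = []
    for key in REQUIRED_KEYS:
        value = parser.get(section, key, fallback="").strip()
        # Assumption: a value such as "XXXXXXXX" is an unedited placeholder.
        if not value or set(value.upper()) == {"X"}:
            unset.append(key)
    return unset


# Usage, from inside the folder that holds config.ini:
#   with open("config.ini") as handle:
#       print(find_unset_keys(handle.read()))
```

An empty list means every key has been filled in. It does not prove that the token or the IDs are valid.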

The last thing we need to do before we zip up the files and upload them to AWS Lambda is to run the following commands from inside the folder:

pip install requests -t . --upgrade

pip install configparser -t . --upgrade

This will download the required Python packages and install them into the folder.

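Zip up the entire contents of the folder, so that lambda_function.py sits at the root of the archive rather than inside a subfolder. The instructions here can help if needed.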
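If you would rather script the packaging step, here is a minimal sketch using only the Python standard library. `build_lambda_zip` is a hypothetical helper name, and the folder and archive names in the usage comment are placeholders. It zips the contents of the folder, not the folder itself, because a function created in the console looks for `lambda_function.lambda_handler` at the root of the archive by default.

```python
import os
import shutil


def build_lambda_zip(source_dir, zip_base_name):
    """Zip the contents of source_dir and return the path of the archive.

    zip_base_name is the archive path without the ".zip" suffix. Keep it
    outside source_dir so the archive does not end up inside itself.
    """
    source_dir = os.path.abspath(source_dir)
    if not os.path.isfile(os.path.join(source_dir, "lambda_function.py")):
        raise FileNotFoundError("lambda_function.py not found in " + source_dir)
    # root_dir makes every path in the archive relative to source_dir.
    return shutil.make_archive(zip_base_name, "zip", root_dir=source_dir)


# Usage (placeholder paths):
#   build_lambda_zip("Scale_Up_SDDC", "scale_up_sddc")  # creates scale_up_sddc.zip
```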

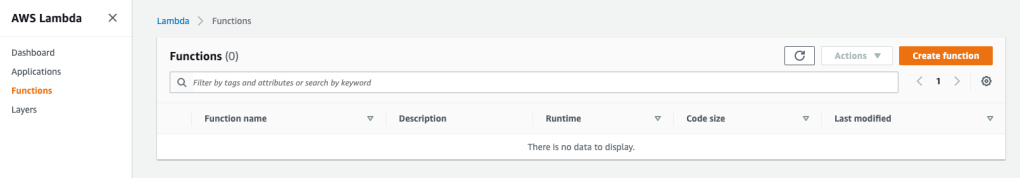

Next, go to the AWS console. Log on to AWS Lambda. Create a Function.

Author a function from scratch. Give the function a name (such as “Scale_Up_SDDC”) and select Python as the “Runtime”. I tested with Python 3.8 and 2.7.

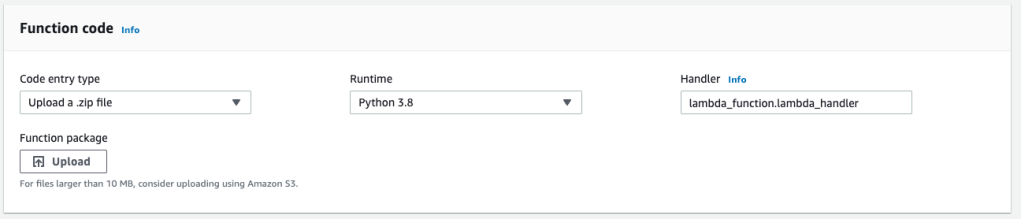

Now, you will have an empty function. You will upload the Python script in the ZIP file we created earlier.

Upload the ZIP. This includes the entire function and required packages.

Now we have a function that will add some hosts but we need an event to trigger its execution. In our case, we will use an AWS CloudWatch Event cron job to run it. Click on “Add trigger” and select “CloudWatch Events/EventBridge”.

Give the Rule a name and select “Schedule expression”. We will define the “cron” expression in that field.

The following cron expression triggers the event every Monday at 6am (schedule expressions are evaluated in UTC). You can customize your schedule by following the samples here.

cron(0 6 ? * MON *)
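The six fields of that expression are minutes, hours, day-of-month, month, day-of-week and year. As an illustration, the small helper below builds weekly expressions of the same shape. `weekly_cron` is a hypothetical name and is not part of the original scripts. The Friday 6pm time is just an example for a matching scale-down schedule.

```python
DAYS = ("SUN", "MON", "TUE", "WED", "THU", "FRI", "SAT")


def weekly_cron(day, hour, minute=0):
    """Build a weekly schedule expression; the time is interpreted as UTC."""
    day = day.upper()
    if day not in DAYS:
        raise ValueError("day must be one of " + ", ".join(DAYS))
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    # Fields: minutes hours day-of-month month day-of-week year.
    # Day-of-month is "?" because the day of the week is given instead.
    return "cron({} {} ? * {} *)".format(minute, hour, day)


print(weekly_cron("MON", 6))   # cron(0 6 ? * MON *)
print(weekly_cron("FRI", 18))  # cron(0 18 ? * FRI *)
```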

Assuming you left “Enable trigger” checked when you created the cron job, your AWS Lambda function is ready!
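The console steps above can also be scripted. The following is a rough sketch, not something I have run against the setup in this post: `schedule_lambda` is a hypothetical helper, and the rule name, function name and ARN in the usage comment are placeholders. It relies on the boto3 calls `put_rule`, `add_permission` and `put_targets`, and takes the clients as arguments so it can be exercised without AWS access.

```python
def schedule_lambda(events_client, lambda_client, rule_name, schedule,
                    function_name, function_arn):
    """Create (or update) a schedule rule and point it at a Lambda function."""
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )["RuleArn"]

    # Allow the rule to invoke the function.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=rule_name + "-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # Attach the function as the rule's target.
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "1", "Arn": function_arn}],
    )
    return rule_arn


# Usage (requires boto3 and AWS credentials; all names are placeholders):
#   import boto3
#   schedule_lambda(boto3.client("events"), boto3.client("lambda"),
#                   "Scale_Up_SDDC_Monday", "cron(0 6 ? * MON *)",
#                   "Scale_Up_SDDC",
#                   "arn:aws:lambda:REGION:ACCOUNT_ID:function:Scale_Up_SDDC")
```

Running it a second time with the same rule name will fail at `add_permission`, because that statement ID already exists on the function.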

Once the event has been triggered, click on Monitoring to see the function’s executions. I validated this with a ‘rate’ schedule expression (rate(10 minutes)), which runs the function every 10 minutes instead of on a specific day.

You can also see the logs generated by the Python script in CloudWatch Log Groups.

I validated it with my VMware Cloud on AWS SDDC and I could see my nodes scaling up and down as expected.

To scale your SDDC back down, you can use the following Python script (GitHub) and follow the same method to schedule when you want your SDDC to shrink.
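The linked script is not reproduced here, so the following is only a minimal sketch of what a scale-down call can look like. It assumes that the VMware Cloud on AWS API removes hosts through the same `/esxs` endpoint used for adding them, with an `action=remove` query parameter; please check this against the API reference before relying on it. `remove_hosts` and its `post` argument are hypothetical names introduced for illustration.

```python
def remove_hosts(hosts, org_id, sddc_id, session_token,
                 base_url="https://vmc.vmware.com", post=None):
    """Ask VMware Cloud on AWS to remove `hosts` hosts from the SDDC."""
    if post is None:
        import requests  # imported lazily so the function can be tested offline
        post = requests.post
    url = base_url + "/vmc/api/orgs/" + org_id + "/sddcs/" + sddc_id + "/esxs"
    response = post(
        url,
        params={"action": "remove"},  # assumption: remove instead of the default add
        json={"num_hosts": hosts},
        headers={"csp-auth-token": session_token},
    )
    return response
```

In a Lambda function, you would call it from `lambda_handler` in the same way the scale-up script calls `addCDChosts`, passing the access token obtained from the refresh token.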

I hope you found this post useful. Thanks for reading.
